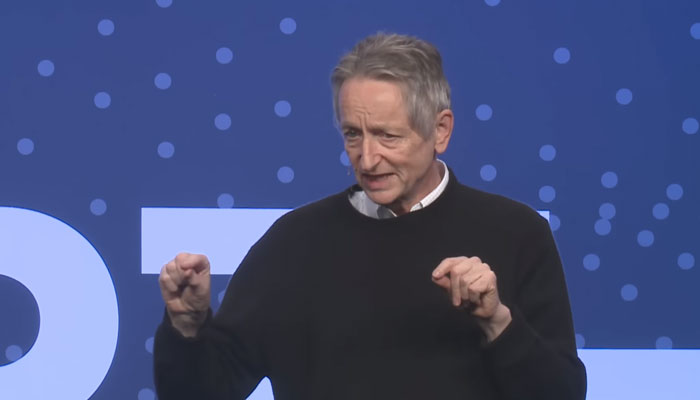

Why did Godfather of AI Geoffrey Hinton quit Google?

Geoffrey Hinton had announced he was parting ways with Google to speak freely about dangers of AI

May 03, 2023

Geoffrey Hinton, also known as the Godfather of AI, who parted ways with Google on Monday, said that at its current pace of development the technology could eventually take control from humans, as it would learn "ways of manipulating people".

The Godfather of AI, 75, said in an interview with CNN that he had to "blow the whistle" to let technology developers know what the dangers of AI are.

He said he had come to realise that machines are getting smarter than people at an unprecedented pace.

Hinton, who helped develop the neural networks behind the AI used in numerous technology products, said: "I'm just a scientist who suddenly realised that these things are getting smarter than us."

"I want to sort of 'blow the whistle' and say we should worry seriously about how we stop these things getting control over us."

Hinton has been in the news since announcing that he had parted ways with Google to speak freely about the dangers of AI.

Reflecting on his own role in developing the technology, Hinton told the New York Times: "I console myself with the normal excuse: If I hadn't done it, somebody else would have."

He warned that AI could create a world where many will "not be able to know what is true anymore," and placed special emphasis on the jobs that could be replaced by the technology.

"The idea that this stuff could actually get smarter than people — a few people believed that," the Godfather of AI said.

Hinton said: "If it gets to be much smarter than us, it will be very good at manipulation because it will have learned that from us, and there are very few examples of a more intelligent thing being controlled by a less intelligent thing."

"It knows how to programme so it'll figure out ways of getting around restrictions we put on it. It'll figure out ways of manipulating people to do what it wants."

In March, a large number of experts, including tech CEOs, signed an open letter highlighting the dangers of AI, saying it poses "profound risks to society and humanity."

Tech billionaire and Tesla CEO Elon Musk has also announced that he is building software far more powerful than ChatGPT 4.

Apple co-founder Steve Wozniak — one of the signatories of the letter — said Tuesday: "Tricking is going to be a lot easier for those who want to trick you. We’re not really making any changes in that regard – we’re just assuming that the laws we have will take care of it."

The Apple co-founder also suggested "regulating AI" while talking to CNN.

Hinton, who did not sign the letter, said: "I don’t think we can stop the progress. I didn’t sign the petition saying we should stop working on AI because if people in America stop, people in China wouldn't."

However, he said: "It's not clear to me that we can solve this problem. I believe we should put a big effort into thinking about ways to solve the problem. I don’t have a solution at present."